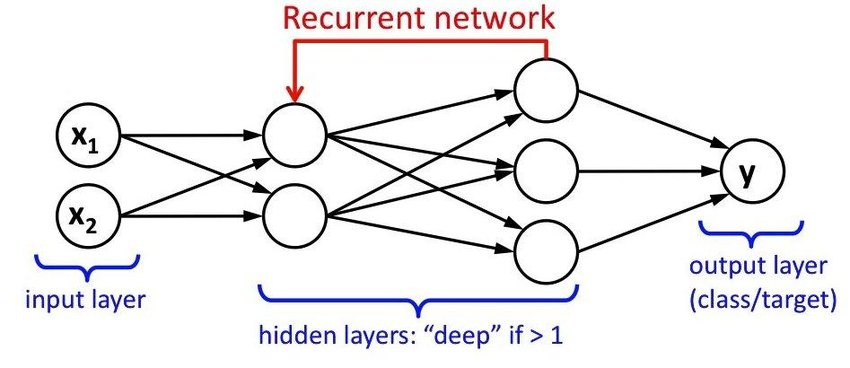

Recurrent Neural Networks (RNNs) are a type of artificial neural network designed to handle sequential data, such as time series, text, and speech. Unlike feedforward networks, which treat each input independently, RNNs carry an internal state from one step to the next. This state lets them preserve information from previous inputs and use it to make predictions.

RNNs consist of a repeating module, often called a cell, that takes the previous hidden state and the current input and produces a new hidden state. This hidden state is then used to make predictions about the next output in the sequence. Because the same cell, with the same weights, is applied at every step and the hidden state summarizes the inputs seen so far, RNNs can process sequences of arbitrary length.
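The recurrence described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than how any particular library implements it: the function name `rnn_forward`, the weight names `W_x`, `W_h`, `b`, the `tanh` activation, and the small random initialization are all assumptions chosen for the example.

```python
import numpy as np

def rnn_forward(inputs, W_x, W_h, b):
    """Run a plain RNN over one sequence.

    inputs has shape (timesteps, input_features);
    the result has shape (timesteps, hidden_units).
    """
    hidden_units = W_h.shape[0]
    h = np.zeros(hidden_units)      # initial hidden state
    states = []
    for x_t in inputs:              # one step per element of the sequence
        # New hidden state from the current input and the previous hidden state
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)

# Illustrative sizes and random weights (assumptions, not trained values)
rng = np.random.default_rng(0)
timesteps, input_features, hidden_units = 100, 32, 32
W_x = rng.standard_normal((hidden_units, input_features)) * 0.1
W_h = rng.standard_normal((hidden_units, hidden_units)) * 0.1
b = np.zeros(hidden_units)

sequence = rng.random((timesteps, input_features))
states = rnn_forward(sequence, W_x, W_h, b)
print(states.shape)  # (100, 32)
```

Because the loop reuses the same weights at every step, the same function works unchanged on a sequence of any length.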

One of the key advantages of RNNs is their ability to model dependencies between elements that are far apart in a sequence, although plain RNNs struggle with very long-range dependencies in practice. This makes them well suited to tasks such as language translation, speech recognition, and text generation. For example, in machine translation, an RNN can learn to translate a sentence from one language to another by taking into account the context of the entire sentence, rather than just individual words.

RNNs have been used in a variety of applications, including natural language processing, speech recognition, and computer vision. For example, in natural language processing, RNNs can be used to classify texts into different categories or to generate new text based on a given input. In speech recognition, RNNs can be used to transcribe speech into text or to translate speech from one language to another.

One of the challenges in training RNNs is the vanishing gradient problem: as gradients are propagated backward through many time steps they are multiplied repeatedly and can become very small, so the network is unable to learn from inputs that lie far in the past. To address this, researchers have developed variants of RNNs, such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs), which use gating mechanisms to control the flow of information through the network and mitigate the vanishing gradient problem.
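The shrinking effect can be seen numerically with a small NumPy sketch. It tracks how strongly the hidden state at step t depends on the initial hidden state in a plain `tanh` RNN. The setup is an assumption made for illustration: the recurrent matrix `W_h` is an orthogonal matrix scaled by 0.5, so each step can shrink the gradient by at least half, and the random vector `input_term` stands in for the contribution of the input at each step.

```python
import numpy as np

rng = np.random.default_rng(0)
hidden_units = 16
steps = 50

# Orthogonal matrix scaled by 0.5, so its largest singular value is 0.5
# (an illustrative choice, not a trained weight matrix)
Q, _ = np.linalg.qr(rng.standard_normal((hidden_units, hidden_units)))
W_h = 0.5 * Q

h = np.zeros(hidden_units)
grad = np.eye(hidden_units)   # Jacobian of h_t with respect to h_0
norms = []
for t in range(steps):
    input_term = rng.standard_normal(hidden_units)  # stand-in for the input contribution
    h = np.tanh(W_h @ h + input_term)
    # Jacobian of one step: diag(1 - h^2) @ W_h
    step_jacobian = (1.0 - h ** 2)[:, None] * W_h
    grad = step_jacobian @ grad
    norms.append(np.linalg.norm(grad, 2))

for t in (1, 10, 50):
    print(f"after {t:2d} steps: gradient norm = {norms[t - 1]:.3e}")
```

Since the derivative of `tanh` is at most 1 and `W_h` has spectral norm 0.5, the norm after t steps is bounded by 0.5 to the power t, which is why the signal from early inputs fades so quickly here.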

Top 5 RNN Variants and Extensions

1- Long Short-Term Memory (LSTM) networks: LSTMs are a popular type of RNN designed to overcome the vanishing gradient problem. They use memory cells to store information and gates to decide what to write, keep, and read at each step.

2- Gated Recurrent Units (GRUs): GRUs are a simplified version of LSTMs that often offer comparable performance with fewer parameters, making them computationally more efficient.

3- Bidirectional RNNs: Bidirectional RNNs process sequences in both forward and backward directions, allowing the model to use context from both earlier and later positions in the sequence.

4- Stacked RNNs: Stacked RNNs are RNNs that have multiple hidden layers, allowing them to learn increasingly complex representations of the input data.

5- Attention-Based RNNs: Attention-based RNNs use attention mechanisms to focus on the most relevant parts of the input sequence, enabling the model to make predictions based on the most important information.
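To make the "fewer parameters" comparison between GRUs and LSTMs concrete, here is a rough parameter count under the textbook formulations. The helper `recurrent_params` is hypothetical and assumes one input weight matrix, one recurrent weight matrix, and one bias vector per block; a plain RNN has one such block, a GRU three (update gate, reset gate, candidate state), and an LSTM four (input, forget, and output gates plus the candidate cell state). Some implementations add extra bias terms, so reported counts may differ slightly.

```python
def recurrent_params(input_features, hidden_units, blocks):
    """Count weights and biases for a recurrent layer with `blocks` gate-like blocks."""
    # Each block: input weights + recurrent weights + one bias vector
    per_block = hidden_units * (input_features + hidden_units) + hidden_units
    return blocks * per_block

input_features, hidden_units = 32, 32
simple_rnn = recurrent_params(input_features, hidden_units, blocks=1)
gru = recurrent_params(input_features, hidden_units, blocks=3)
lstm = recurrent_params(input_features, hidden_units, blocks=4)

print(simple_rnn, gru, lstm)  # 2080 6240 8320
```

With these sizes a GRU has three quarters of the parameters of an LSTM of the same width.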

Python Example

```python
import numpy as np
from keras.models import Sequential
from keras.layers import SimpleRNN, Dense

# Generate dummy data with 1000 samples
# Each sample has 100 timesteps and 32 input features
timesteps = 100
input_features = 32
output_features = 1

# Create input data X with random values
X = np.random.random((1000, timesteps, input_features))

# Create target data y with random values
y = np.random.random((1000, output_features))

# Build the model using Keras Sequential model
model = Sequential()

# Add an RNN layer to the model with 32 hidden units
# The input shape is specified as (timesteps, input_features)
model.add(SimpleRNN(32, input_shape=(timesteps, input_features)))

# Add a dense layer with 1 unit to make the final prediction
model.add(Dense(1))

# Compile the model using the RMSprop optimizer and MSE loss function
model.compile(optimizer='rmsprop', loss='mse')

# Train the model using the fit function
# Train on the input data X and target data y
# Train for 10 epochs and use a batch size of 32
model.fit(X, y, epochs=10, batch_size=32)
```

This code uses the Sequential model from the Keras library to build a simple RNN with 32 hidden units. The input data, X, is a 3D tensor with 1000 samples, each with 100 timesteps and 32 input features. The target data, y, is a 2D tensor with 1000 samples and 1 output feature.

The RNN layer is followed by a dense layer with 1 unit, which takes the final hidden state and makes the prediction. The model is compiled using the Root Mean Square Propagation (RMSprop) optimizer and the mean squared error (MSE) loss function.

Finally, the model is trained using the fit function, which trains the model on the X and y data for 10 epochs, using a batch size of 32.
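When reading the training loss from this example, it helps to know what a trivial predictor achieves. Because the targets are random and unrelated to the inputs, a model cannot do much better on unseen data than always predicting the mean of y. The sketch below computes that baseline with NumPy. The seeded generator is an assumption added for reproducibility; the original example draws unseeded values from the same uniform distribution.

```python
import numpy as np

rng = np.random.default_rng(0)
y = rng.random((1000, 1))   # uniform in [0, 1), same shape as the targets above

# Always predict the mean of the targets
baseline_prediction = y.mean()
baseline_mse = np.mean((y - baseline_prediction) ** 2)

# The variance of a uniform [0, 1) variable is 1/12
print(f"baseline MSE: {baseline_mse:.4f} (theory: {1 / 12:.4f})")
```

A training loss that settles near 1/12 is therefore expected on this dummy data. Values well below it would mean the network is memorizing noise rather than learning a pattern.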

In conclusion, Recurrent Neural Networks (RNNs) are a powerful tool for processing sequential data and have found a wide range of applications in natural language processing, speech recognition, and computer vision. Variants such as LSTMs and GRUs address the main weakness of plain RNNs, the vanishing gradient problem, and RNNs continue to be an active area of research.